Teaching anyone properly about climate change is a difficult task. The concept is simple to grasp: “if the global temperature rises by more than 2°C by 2100 – that’s bad!” But understanding the sophisticated climate models that scientists develop, and translating this understanding into political negotiations, is a tough challenge. The World Climate Simulation, made available by the MIT think tank spin-off Climate Interactive, and facilitated by Prof. Dr. Florian Kapmeier (ESB Business School, Reutlingen University) for MTEC faculty and students on March 19th 2018, took on exactly that challenge. Here are some personal reflections.[1]

Source: Getting to 2°. Emotions (and temperatures) run high in a mock climate negotiation. by Robin Kazmier, SM ’17, MIT Technology Review, August 16, 2017

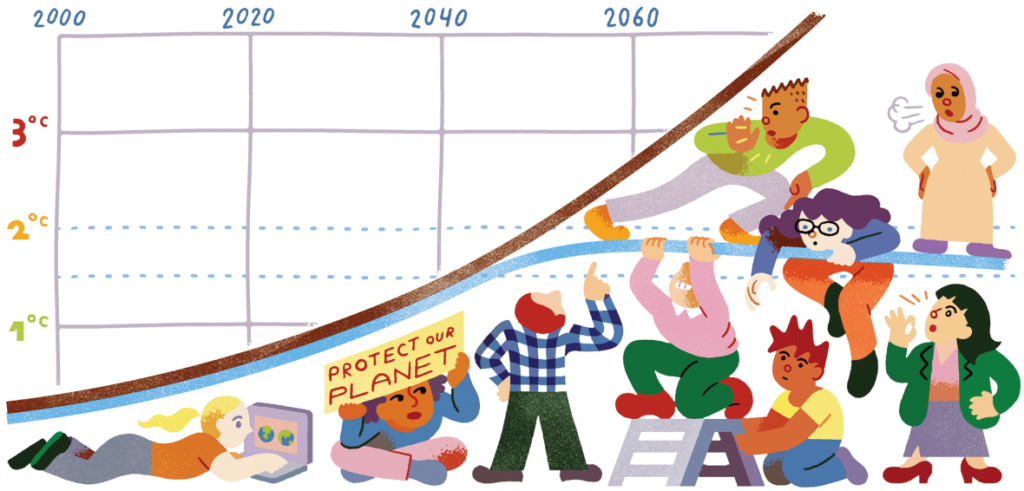

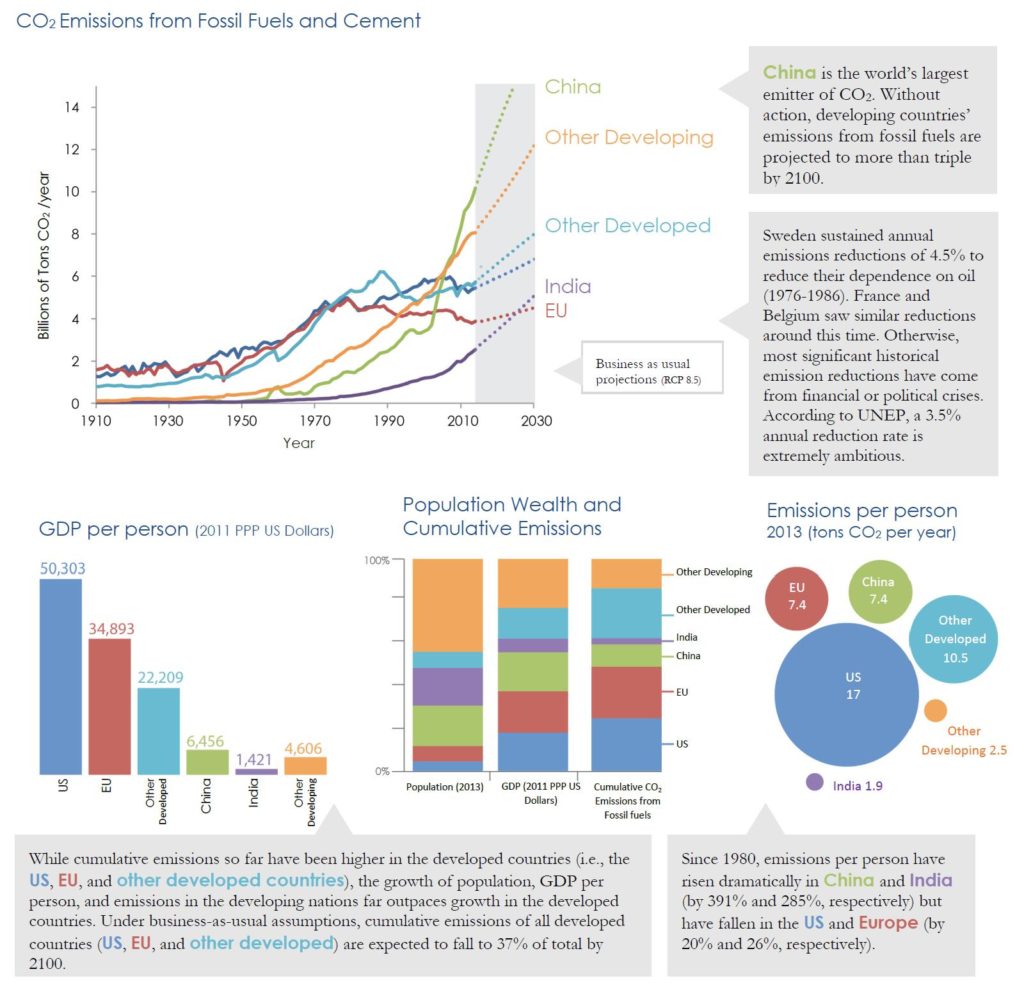

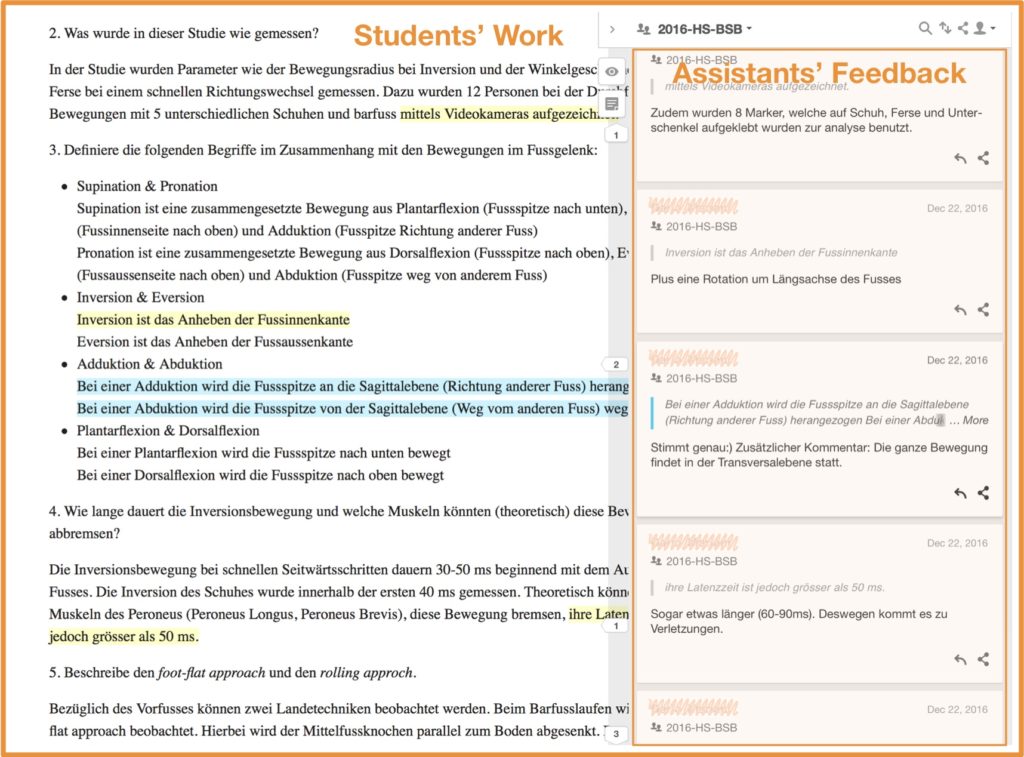

The scenario is as follows: at the next United Nations Climate Change Conference, the UN Secretary General (played by the simulation’s facilitator) asks the participating countries and country blocs to make pledges to curb the negative effects of climate change. The USA and Europe get seats at lushly decorated conference tables, stocked with privileges and amenities: coffee machines, food, fruits, and soft drinks. Other developed countries like Russia and Canada find themselves at sparsely equipped conference furniture, but they still get a few sandwiches. China and India both get nothing but some water on their tables, while the large bloc of developing countries faces a blunt reality: no food, no water, no chairs, no table. The unequal distribution of wealth across the nations becomes clearly visible at the beginning of the game.

On the sidelines and without voting powers, fossil fuel lobbyists, climate activists, and a delegation of US cities and states, the US Climate Alliance, complete the line-up. The simulation can easily accommodate 60 participants; we played it with 20 and without the fossil fuel lobbyists.

Figure 1 Impressions from the WCS negotiations

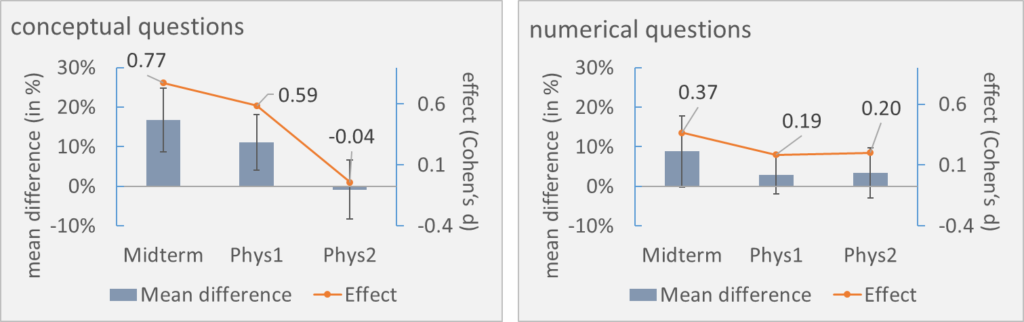

Equipped with brief profiles that summarize their respective positions in the climate negotiations, the participants start a first round of negotiations. Each country or bloc gives a two-minute statement in the UN assembly. Their pledge contains concrete numbers: the year their emissions peak, the year the reduction of emissions begins, the rate of yearly reduction, and percentage numbers for the prevention of deforestation and afforestation efforts. And then, money talks: how much will the regions contribute to the global fund for mitigation and adaptation to climate change in billions USD per year? These variables from all six countries and country blocs are put on a flipchart.
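To make the pledge numbers a little more tangible, here is a minimal Python sketch of how three of them (peak year, start of reductions, yearly reduction rate) could shape an emissions pathway. It is an illustration only and not how C-ROADS works internally: the `Pledge` class, the `emissions_pathway` function, the base year, the emissions index of 100, and the 1% yearly growth before the peak are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Pledge:
    """Hypothetical container for three of the pledge numbers."""
    peak_year: int             # last year in which emissions still grow
    reduction_start_year: int  # first year in which emissions fall
    annual_reduction: float    # fractional cut per year, e.g. 0.03 for 3%


def emissions_pathway(pledge, base_year=2018, base_emissions=100.0,
                      growth=0.01, end_year=2100):
    """Return {year: emissions index} for one pledge.

    Assumed toy dynamics: emissions grow by `growth` per year up to the
    peak year, stay flat until reductions start, then shrink by
    `annual_reduction` per year.
    """
    if pledge.reduction_start_year < pledge.peak_year:
        raise ValueError("reductions cannot start before the peak year")

    pathway = {base_year: base_emissions}
    level = base_emissions
    for year in range(base_year + 1, end_year + 1):
        if year <= pledge.peak_year:
            level *= 1 + growth                    # still growing
        elif year >= pledge.reduction_start_year:
            level *= 1 - pledge.annual_reduction   # yearly cut
        # otherwise: plateau between peak and start of reductions
        pathway[year] = level
    return pathway


# Example: peak in 2030, reductions from 2040 at 3% per year
example = emissions_pathway(Pledge(2030, 2040, 0.03))
print(round(example[2030], 1), round(example[2100], 1))
```

Changing the peak year or the reduction rate and re-running the sketch gives a rough feel for why delegations haggle over exactly these numbers, although the real simulator also accounts for land use and the climate system's response.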

The US delegation opted for realpolitik in the spirit of pulling out of the Paris climate agreement. Climate change is fake news, hence: no contributions. The EU delegation pledged their green agenda, but tied their contributions to the fund to conditions: China, India, and the developing nations would have to aim for ambitious goals to curb climate change. Which they didn’t. China argued that the causes of the current situation are rooted in centuries of American and European industrialization; therefore, it is a European and American responsibility to fix the mess. Likewise, India’s delegation pointed to the prospects of their nation’s industrial development. The developing nations sought to catch up economically and would need to produce enough food for their population. Actually seeing the abundance of food in the “first world” and growing increasingly hungry (having skipped lunch) did not lead to appeasement. The Climate Alliance’s meagre donation of grapes rather accentuated their grievances.

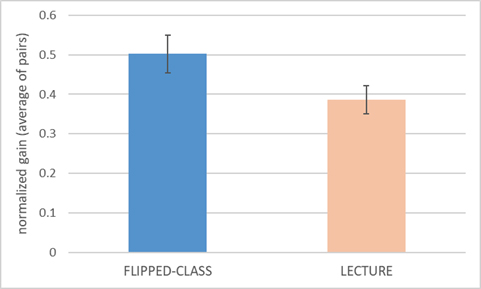

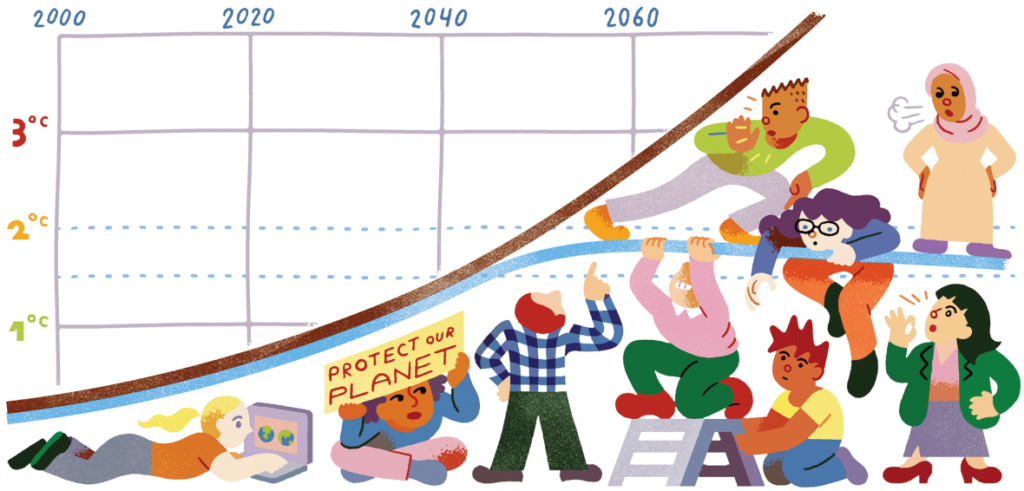

A political solution to the climate change negotiations seemed far away. Having given their pledges, participants voted on the expected result for global warming in 2100. Would it be business as usual with its foreseeable catastrophic events of more than 4°C rise in global temperatures? Or would the pledges lead to outcomes around 3.6°C or even approach the ambitious aim of 2°C global warming in 2100?

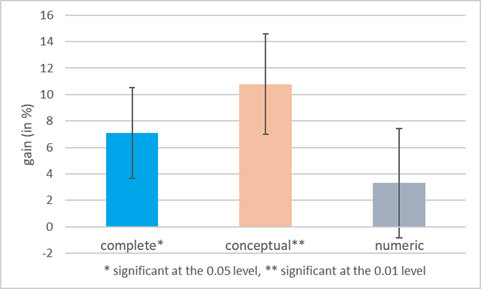

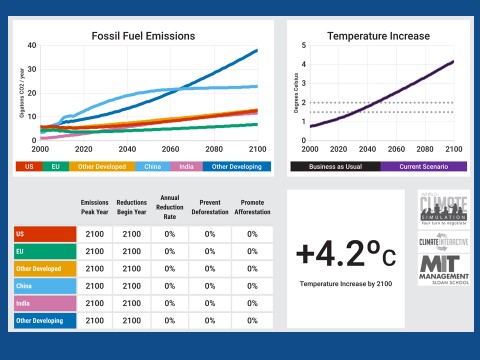

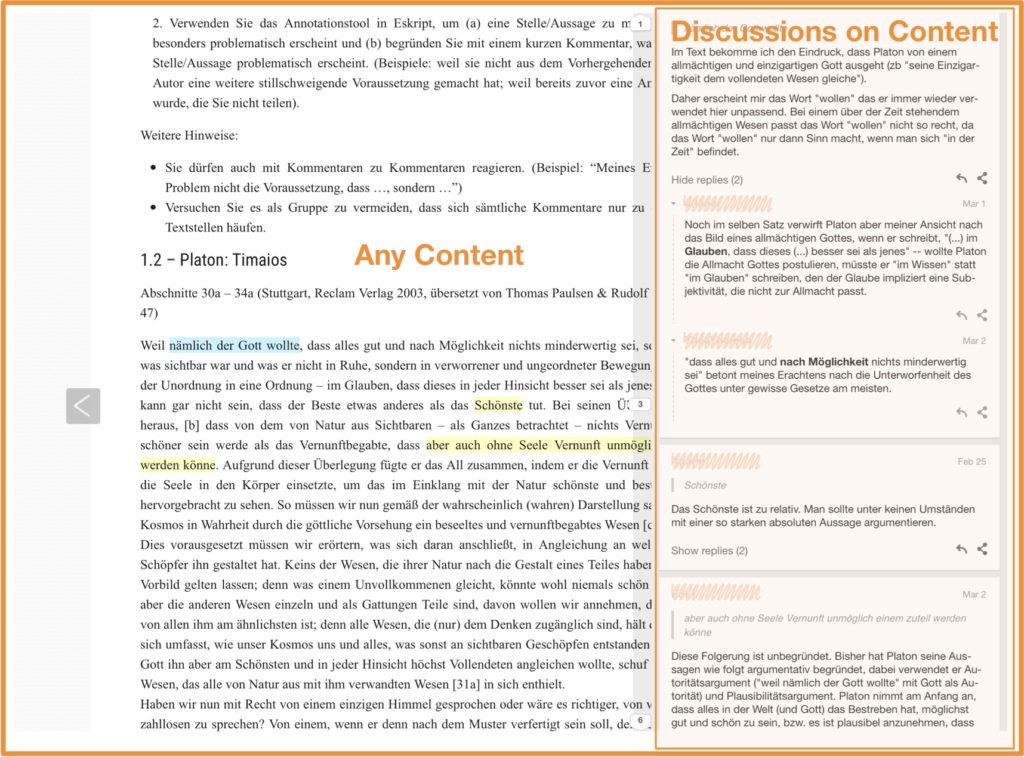

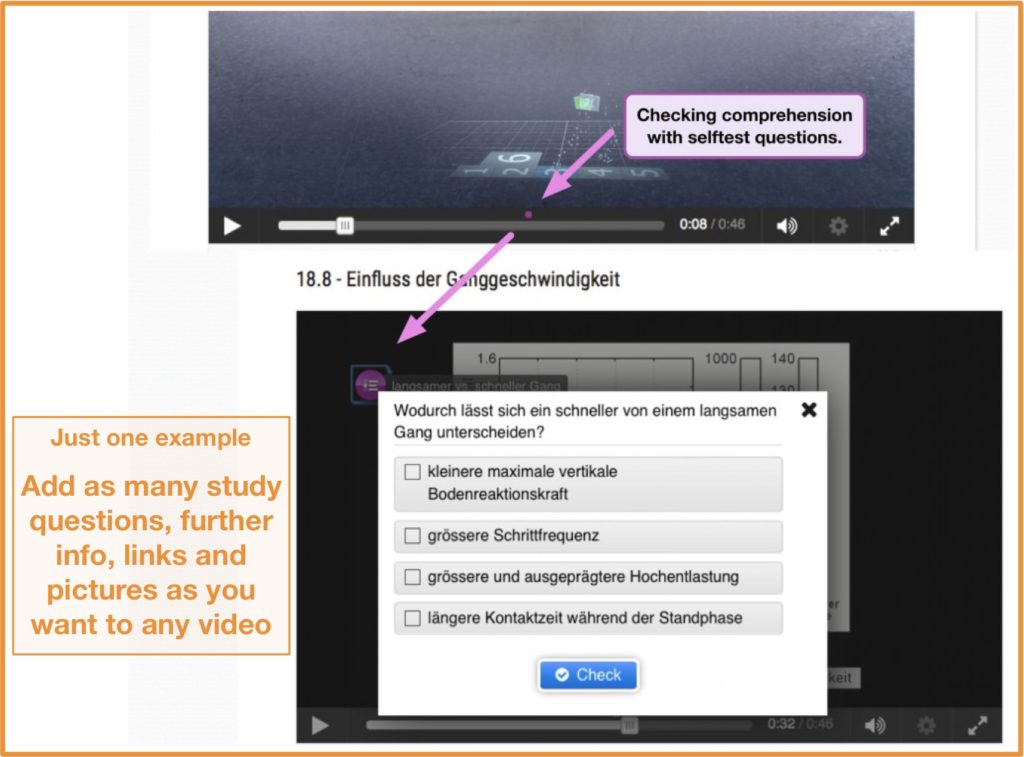

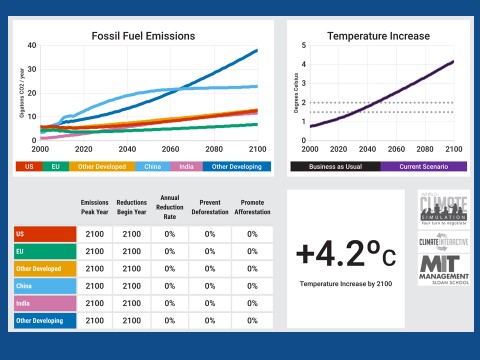

Pessimism flooded the room as the numbers were punched into C-ROADS. C-ROADS (Climate Rapid Overview and Decision Support) is a scientifically reviewed policy simulator on climate change, with which users can test their own emission pathways to limit global warming to below 2°C and thus learn for themselves. The results are calculated in real time and give a direct visual output of the effects on global warming (temperature), ocean acidification, and sea level rise. A screenshot is given in figure 2.

Figure 2 – Screenshot of the C-ROADS simulator

Result of the first round of negotiations: somewhere around 3.6°C rise in temperature. The UN Secretary General used the outcome to give the conference participants a passionate account of what this would mean in reality: flooded coastal regions all over the world, with an uninhabitable Shanghai, and foreseeable catastrophic weather conditions with ever stronger and more frequent tropical storms.

The second round of negotiations began with Trump walking out and going golfing. India claimed the USA’s coffee machine, and the developing nations began looting. They stripped the US delegation of their sandwiches, cookies, and soft drinks, and also took the conference chairs. They left the flowers. The EU, Russia and Canada negotiated as if there were no tomorrow, and China’s delegation opened up to the idea that actually having a tomorrow that is worth waking up to was not too bad after all. The second round of pledges was typed into C-ROADS, and while the result improved upon the first round, it was still far away from the 2°C goal. A sobering outcome.

The ensuing discussion led to a much deeper understanding of the different factors and their effects on the climate change projections. It is difficult to describe how much the participants’ comprehension of the numbers and the data in the complex climate models increased. But the questions and attempts to solve the climate dilemma made it clear that the World Climate Simulation succeeds in engaging participants with a truly mind-boggling dataset. It accentuates the interdependencies of the different countries and the need to collaborate to reach solutions. What struck me even more were the potential health benefits for people that will accompany a transition away from fossil fuels to renewable energy sources. To pick just one example: less fossil fuel use means less asthma; treating asthma is expensive, not having to treat it saves money. In a bigger context, and maybe touching the game’s underlying metanarrative, if we simply stop poisoning ourselves with CO2 emissions, we could be ready for big strides in the right direction. But we have to act immediately.

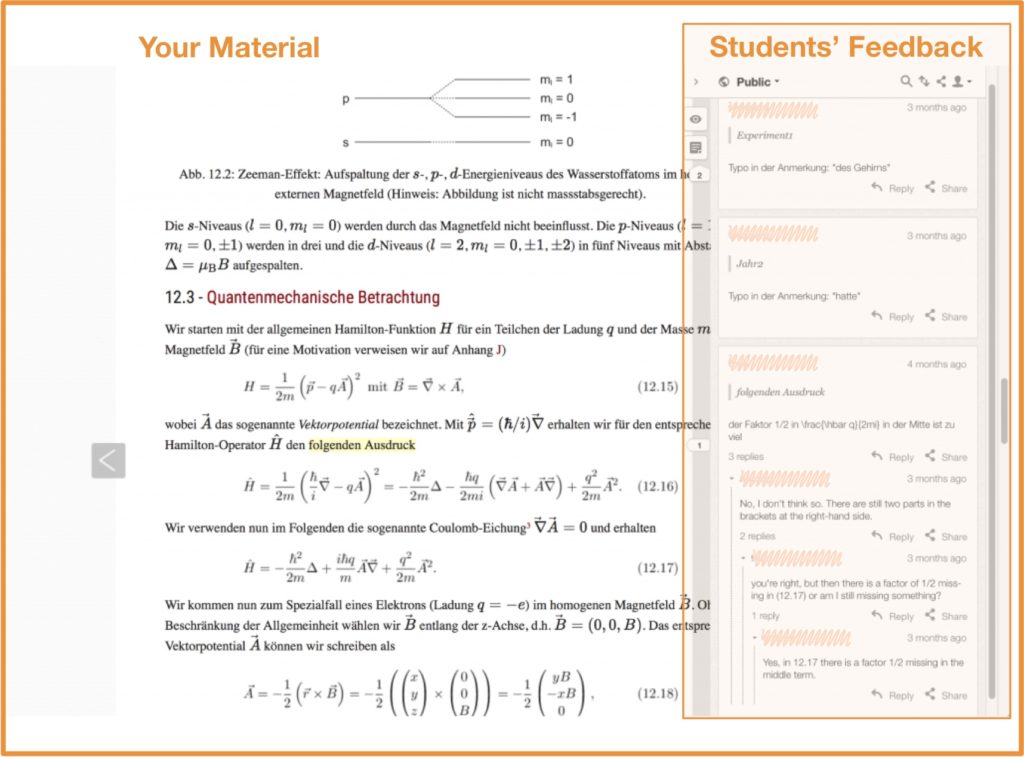

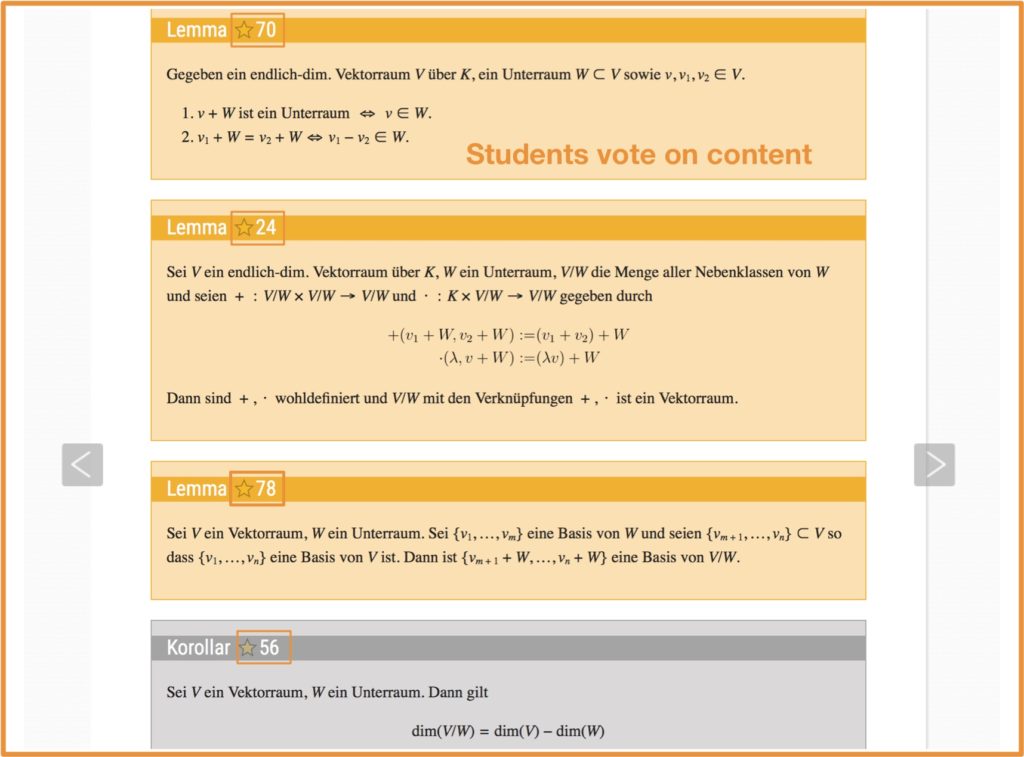

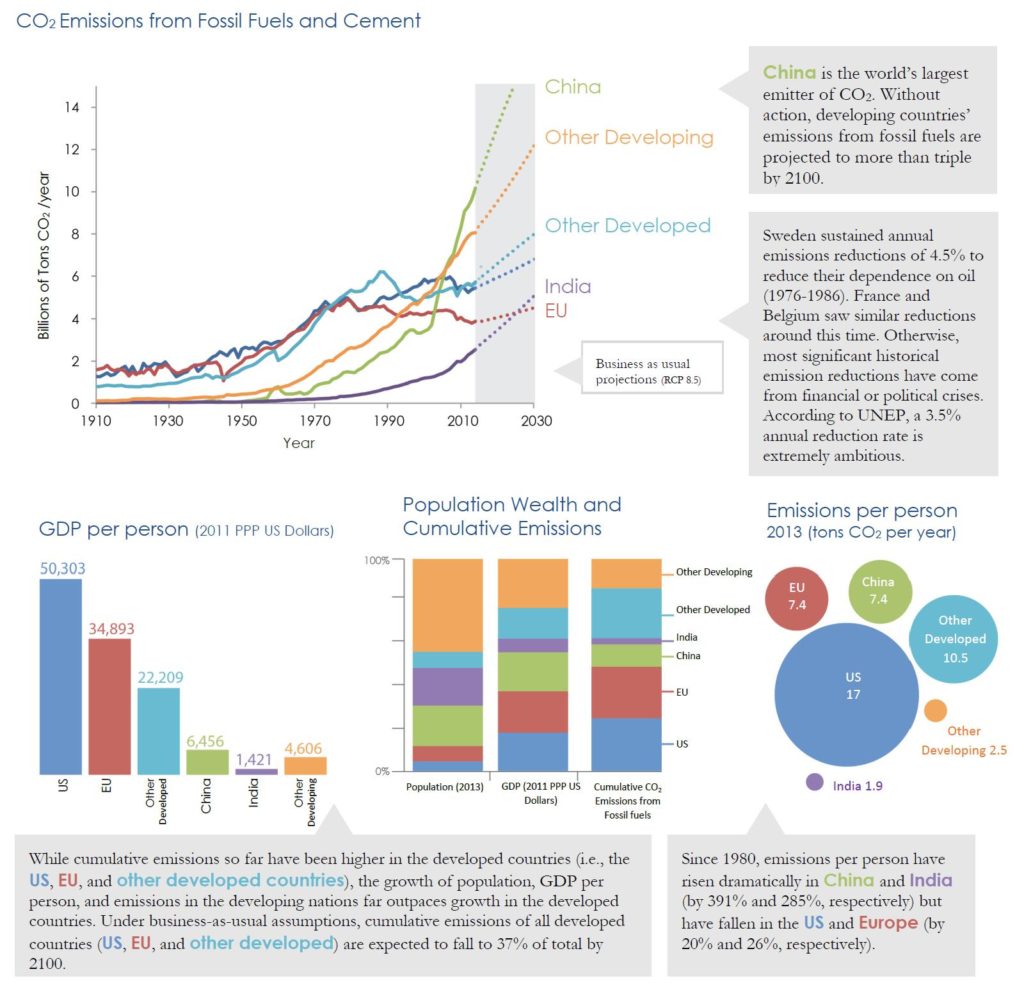

Figure 3 Part of the briefing information for participants

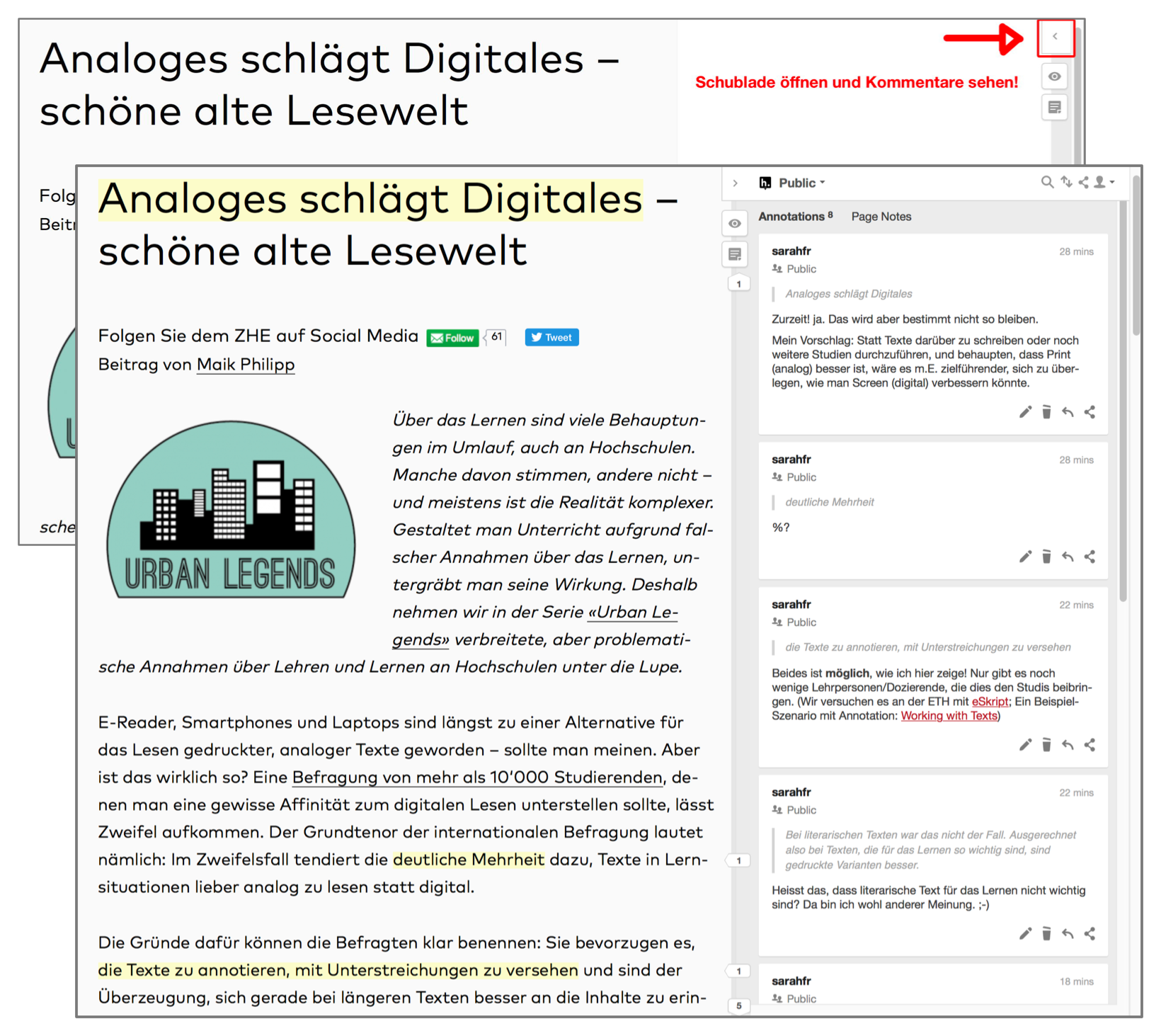

From a didactic point of view, the simulation combines learning about a complex dataset and its interrelated factors with an emotional dimension. Participants play a role and receive immediate feedback about their negotiation results via the C-ROADS tool. Intense discussions within the countries and country blocs begin to merge with attempts to collaborate across parties. Concepts about climate change, including misconceptions, are addressed in a constructive way that allows participants to model and adapt their decision-making to what they learn. It’s a powerful learning and teaching format.

If you want to play the WCS, I believe that the simulation needs a good facilitator to regulate the game dynamics and deliver the Secretary General’s content-heavy input. The teaching notes are very well prepared (see figure 3 for an example) and should make it possible for anyone to organize the WCS for the first time. The simulation (in the setting that we played) requires a time slot of about 3.5 to 4 hours. That leaves enough time for a debriefing to summarize the learning experience and let participants reflect on their next steps individually. We had faculty from all levels (Prof, Postdoc, PhD) and master’s students learning together as participants in the simulation, which added a new opportunity to meet in the department. It was a great learning opportunity!

Information on the simulation, including the full set of slides and materials to play the simulation (also in other languages including German, French, and Italian):

https://www.climateinteractive.org/programs/world-climate/

C-ROADS can be downloaded for free here:

https://www.climateinteractive.org/tools/c-roads/

[1] The event was organized by Johannes Meuer (SusTec) and Erik Jentges (MTEC Teaching Innovations Lab). A huge “thank you!” to Florian Kapmeier for an energetic and passionate facilitation of the simulation.
