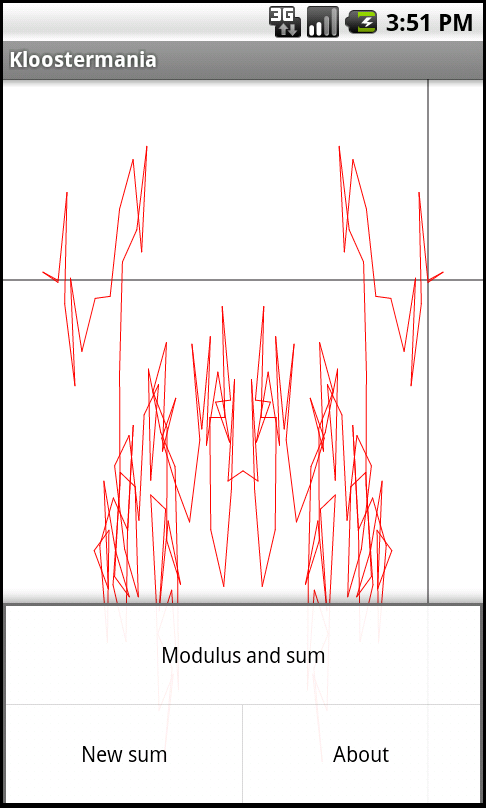

I’m continuing to prepare my notes for the course on exponential sums over finite fields, and after the fourth moment of Kloosterman sums, I’ve just typed the final example of “explicit” computation, that of the Salié sums, which for the prime field Z/pZ are given by

$$S(a,b;p)=\sum_{x\in(\mathbf{Z}/p\mathbf{Z})^\times}\left(\frac{x}{p}\right)e\left(\frac{ax+bx^{-1}}{p}\right)$$

(the (x/p) being the Legendre symbol, and e(z)=exp(2iπ z) as usual in analytic number theory). These look suspiciously close to Kloosterman sums, of course, but a surprising fact is that they turn out to be rather simpler!

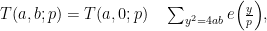

More precisely, for an odd prime p, we have the following formula for the Salié sum S(a,b;p) if a and b are coprime with p (this goes back, I think, to Salié, though I haven’t checked precisely):

$$S(a,b;p)=S(a,0;p)\sum_{y^2=4ab}e\left(\frac{y}{p}\right),$$

the inner sum running over the solutions of the quadratic equation in Z/pZ. The first term is simply a quadratic Gauss sum, which is well-known to be of modulus

$$|S(a,0;p)|=\sqrt{p},$$

and since the inner sum contains either 0 or 2 terms only, depending on the quadratic character of ab modulo p, we get trivially the analogue

$$|S(a,b;p)|\leq 2\sqrt{p}$$

of the Weil bound for Kloosterman sums.
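As a sanity check, the formula and the resulting bound can be tested numerically for small primes. The following is only a minimal Python sketch, under two assumptions: the normalization S(a,b;p) = Σ (x/p) e((ax + bx⁻¹)/p) over non-zero x, and the formula written in the form S(a,b;p) = S(a,0;p) Σ e(y/p) over the solutions of y² = 4ab. The function names are chosen here purely for illustration.

```python
import cmath
import math


def e(z):
    """e(z) = exp(2*i*pi*z), as usual in analytic number theory."""
    return cmath.exp(2j * cmath.pi * z)


def legendre(x, p):
    """Legendre symbol (x/p) for an odd prime p, via Euler's criterion."""
    r = pow(x % p, (p - 1) // 2, p)  # r is 0, 1 or p - 1
    return -1 if r == p - 1 else r


def salie(a, b, p):
    """Direct evaluation of S(a,b;p), summing over x = 1, ..., p - 1."""
    total = 0
    for x in range(1, p):
        x_inv = pow(x, -1, p)  # modular inverse (Python 3.8+)
        total += legendre(x, p) * e(((a * x + b * x_inv) % p) / p)
    return total


def salie_via_roots(a, b, p):
    """Assumed closed form: S(a,0;p) times the sum of e(y/p) over y^2 = 4ab."""
    gauss = salie(a, 0, p)  # quadratic Gauss sum
    roots = [y for y in range(p) if (y * y - 4 * a * b) % p == 0]
    return gauss * sum(e(y / p) for y in roots)


if __name__ == "__main__":
    for p in (3, 5, 7, 11, 13):
        worst = max(
            abs(salie(a, b, p) - salie_via_roots(a, b, p))
            for a in range(1, p)
            for b in range(1, p)
        )
        print(p, worst)  # should be zero up to floating-point error
```

The brute-force search for the roots of y² = 4ab is deliberately naive; it keeps the "0 or 2 terms" dichotomy visible without needing a modular square-root routine.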

The standard proof of this formula is due to P. Sarnak. It is the one which is reproduced in my earlier course on analytic number theory and in my book with H. Iwaniec. However, since it involves a somewhat clever trick, I tried a bit to find a more motivated argument (motivated does not mean motivic, though one can certainly do it this way…).

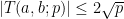

I wasn’t quite successful, but still found a different proof (which, of course, is very possibly not original; I wouldn’t be surprised, say, if Sarnak had found it before the shorter one in his book). The argument uses a similar trick of seeing the sum as the value at 1 of a function which is expanded using some discrete Fourier transform, but maybe the function is less clever: it is roughly

instead of something like

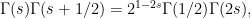

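(and it uses multiplicative characters in the expansion, instead of additive characters). It’s a bit longer, and also less elementary, because one needs to use the beautiful Hasse-Davenport product formula: denoting

$$\tau(\chi)=\sum_{x\in(\mathbf{Z}/p\mathbf{Z})^\times}\chi(x)e\left(\frac{x}{p}\right)$$

the Gauss sums associated with multiplicative characters χ modulo p, and writing χ_2 for the Legendre character, we have

$$\tau(\chi)\tau(\chi\chi_2)=\overline{\chi}(4)\,\tau(\chi^2)\,\tau(\chi_2),$$

which is the analogue for Gauss sums of the duplication formula

$$\Gamma(s)\Gamma\left(s+\tfrac{1}{2}\right)=2^{1-2s}\sqrt{\pi}\,\Gamma(2s)$$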

for the gamma function. Since this formula is most quickly proved using Jacobi sums (the analogues of the Beta function…), which I had also included in my explicit computations of exponential sums, using this argument is a good way to make the text feel interlinked and connected. And it’s always a good feeling to use a proof which is not just the same as what can be found already in three or four places (at least when you don’t know those places; for all I know, this may have been published twenty times already).
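The duplication-type formula for Gauss sums is also easy to test numerically. Here is a hedged Python sketch, assuming the Gauss sums are normalized as τ(χ) = Σ χ(x) e(x/p) over non-zero x (so that the trivial character gives −1), and that the case of the Hasse-Davenport product formula in question reads τ(χ)τ(χχ_2) = χ̄(4) τ(χ²) τ(χ_2), with χ_2 the Legendre character. The characters are indexed by an exponent k through a primitive root; this indexing and all function names are illustrative choices, not something taken from the notes.

```python
import cmath


def e(z):
    """e(z) = exp(2*i*pi*z)."""
    return cmath.exp(2j * cmath.pi * z)


def primitive_root(p):
    """Smallest generator of (Z/pZ)^*, by brute force (fine for small odd p)."""
    for g in range(2, p):
        if len({pow(g, j, p) for j in range(p - 1)}) == p - 1:
            return g
    raise ValueError("no primitive root found; is p an odd prime?")


def log_table(p):
    """Discrete logarithms in base the smallest primitive root."""
    g = primitive_root(p)
    return {pow(g, j, p): j for j in range(p - 1)}


def chi(k, x, logs, p):
    """The character chi_k sending g^j to e(j*k/(p-1)), for x non-zero mod p."""
    return e(k * logs[x % p] / (p - 1))


def gauss_sum(k, logs, p):
    """tau(chi_k) = sum over non-zero x of chi_k(x) e(x/p)."""
    return sum(chi(k, x, logs, p) * e(x / p) for x in range(1, p))


def hasse_davenport_gap(k, p):
    """Absolute difference between the two sides of the assumed formula."""
    logs = log_table(p)
    h = (p - 1) // 2  # chi_h is the Legendre character
    lhs = gauss_sum(k, logs, p) * gauss_sum(k + h, logs, p)
    rhs = (
        chi(k, 4, logs, p).conjugate()
        * gauss_sum(2 * k, logs, p)
        * gauss_sum(h, logs, p)
    )
    return abs(lhs - rhs)


if __name__ == "__main__":
    for p in (3, 5, 7, 11, 13):
        print(p, max(hasse_davenport_gap(k, p) for k in range(p - 1)))
```

With this normalization the identity can be checked for every character, including the trivial one and the Legendre character itself, where both sides reduce to −τ(χ_2).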

Now, you may wonder what Salié sums are good for. To my mind, their time of glory was when Iwaniec used them to prove the first non-trivial upper bound for Fourier coefficients of half-integral weight modular forms (this is the application Sarnak included in his book), which then turns out to lead quite easily (through some additional work of W. Duke) to results about the representations of integers by ternary quadratic forms. Another corollary of Iwaniec’s bound, through the Waldspurger formula and Shimura’s correspondence, was a strong subconvexity bound for twisted L-functions of the type

$$L\left(f\otimes\chi,\tfrac{1}{2}\right)$$

where f is a fixed holomorphic form and χ is a real Dirichlet character, the main parameter being the conductor of the latter.

The point of Iwaniec’s argument was that the Weil-type bound, when applied to the Fourier coefficients of Poincaré series, which can be expressed as a series of Salié sums

(with fixed m and n) in the half-integral weight case, just misses giving a result. So one must exploit cancellation in the sum over those Salié sums, i.e., as functions of the modulus c. This is hopeless, at the moment, for Kloosterman sums, but the semi-explicit expression for the Salié sums in terms of roots of quadratic congruences turns out to be sufficient to squeeze out some saving…

(Nowadays, there is a wealth of techniques to directly prove subconvexity bounds for the twisted L-functions (see, e.g., this paper of Blomer, Harcos and Michel), and one can run the argument backwards, getting better estimates for Fourier coefficients from those; as is well-known, one finds this way that the “optimal” bound for the Fourier coefficients is equivalent to a form of the Lindelöf Hypothesis for the special values…)